The Silent Chauffeur: How Exactly Do Self-Driving Cars Work?

We see them on the news and in our feeds, but how do autonomous cars actually see the world and make decisions? Let's peek under the hood of full self-driving technology.

Honestly, the first time I saw a car navigating a busy street with the driver's hands completely off the wheel, it felt like watching a magic trick. For years, the idea of a fully autonomous car was pure science fiction, something you'd see in I, Robot or Minority Report. Yet, here we are, living in an era where this technology is no longer a distant dream but an emerging reality. It’s one of those technological leaps that forces you to stop and just marvel at how far we've come. It’s not just about convenience; it’s a fundamental shift in our relationship with transportation, and maybe even with the very concept of control.

But beyond the "wow" factor, a very real question pops into my head, and maybe yours too: how does it actually work? It’s easy to imagine a super-smart computer is at the helm, but the reality is a breathtakingly complex and elegant dance between hardware and software. The car isn't just "driving"; it's perceiving, thinking, and reacting in a continuous, high-speed loop. Understanding this process isn't just for engineers or tech geeks anymore. As these vehicles become more common, knowing the basics of how they operate is becoming essential for all of us who share the road. So, let's take a friendly dive into the incredible world of autonomous driving technology.

The Eyes of the Machine: A Symphony of Sensors

Before a self-driving car can make a single decision, it needs to understand its environment with remarkable precision. This is where a suite of sophisticated sensors comes in, acting as the car's eyes and ears. It’s not just one type of sensor but a team of them, each with its own strengths, working together to build a complete, 360-degree picture of the world. Combining their data, a process known as sensor fusion, is critical because it creates redundancy and lets each sensor cover the weaknesses of the others.
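To make sensor fusion a little more concrete, here is a minimal sketch of one of the simplest fusion techniques, inverse-variance weighting, applied to three sensors measuring the distance to the same object. Real vehicles typically use more elaborate methods such as Kalman filters, and the class name, function name, readings, and noise values below are all hypothetical, chosen only for illustration.

```python
from dataclasses import dataclass

@dataclass
class RangeEstimate:
    sensor: str
    distance_m: float   # measured distance to the object, in meters
    variance_m2: float  # assumed (hypothetical) measurement noise variance, in m^2

def fuse(estimates: list[RangeEstimate]) -> tuple[float, float]:
    """Combine independent estimates by inverse-variance weighting.

    Returns the fused distance and its variance. Less noisy sensors get
    proportionally more weight, and the fused variance is smaller than
    any single sensor's variance.
    """
    if not estimates:
        raise ValueError("need at least one estimate")
    weights = [1.0 / e.variance_m2 for e in estimates]
    total = sum(weights)
    fused = sum(w * e.distance_m for w, e in zip(weights, estimates)) / total
    return fused, 1.0 / total

# Illustrative readings: LiDAR is precise, the camera's depth guess is rough
readings = [
    RangeEstimate("lidar", 25.10, 0.01),
    RangeEstimate("radar", 25.40, 0.25),
    RangeEstimate("camera", 24.00, 1.00),
]
distance, variance = fuse(readings)
print(f"fused distance: {distance:.2f} m (variance {variance:.4f} m^2)")
```

The fused answer lands close to the LiDAR reading because that sensor is assumed to be the least noisy, yet the other two still nudge it. If the LiDAR dropped out (say, in heavy fog), the same function would simply fall back on radar and camera.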

The most famous of these sensors is probably LiDAR (Light Detection and Ranging). Many LiDAR units spin around, firing hundreds of thousands to millions of laser pulses per second. When a pulse hits an object, it bounces back, and the sensor measures how long the round trip took. Because light travels at a known speed, multiplying the speed of light by half that round-trip time gives the distance to the object. Repeated across every pulse and angle, this builds an incredibly detailed and accurate 3D "point cloud" of the surroundings. Think of it as the car having a constant, high-definition 3D model of the world around it. It’s excellent for detecting the precise shape, size, and distance of everything from other cars to pedestrians and cyclists.
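The time-of-flight arithmetic behind LiDAR fits in a few lines. This is a simplified sketch: it turns one round-trip time into a distance, then uses the beam's horizontal (azimuth) and vertical (elevation) angles to place that return as an x, y, z point. The function names, the 200-nanosecond example, and the axis convention (x forward, y left, z up) are assumptions for illustration, not any particular sensor's interface.

```python
import math

SPEED_OF_LIGHT_M_S = 299_792_458.0

def tof_range_m(round_trip_s: float) -> float:
    """Distance to the target: the pulse travels out and back, so halve the path."""
    return SPEED_OF_LIGHT_M_S * round_trip_s / 2.0

def to_point(range_m: float, azimuth_deg: float, elevation_deg: float) -> tuple[float, float, float]:
    """Convert one return (range plus beam angles) into an (x, y, z) point.

    Assumed sensor frame: x points forward, y to the left, z up.
    """
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    horizontal = range_m * math.cos(el)  # projection onto the ground plane
    return (horizontal * math.cos(az), horizontal * math.sin(az), range_m * math.sin(el))

# A return arriving 200 nanoseconds after the pulse left
r = tof_range_m(200e-9)
point = to_point(r, azimuth_deg=30.0, elevation_deg=-2.0)
print(f"range {r:.2f} m, point {tuple(round(c, 2) for c in point)}")
```

A 200 ns echo corresponds to an object roughly 30 m away, which shows why LiDAR electronics must time pulses with nanosecond resolution: one nanosecond of error shifts the distance by about 15 cm.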

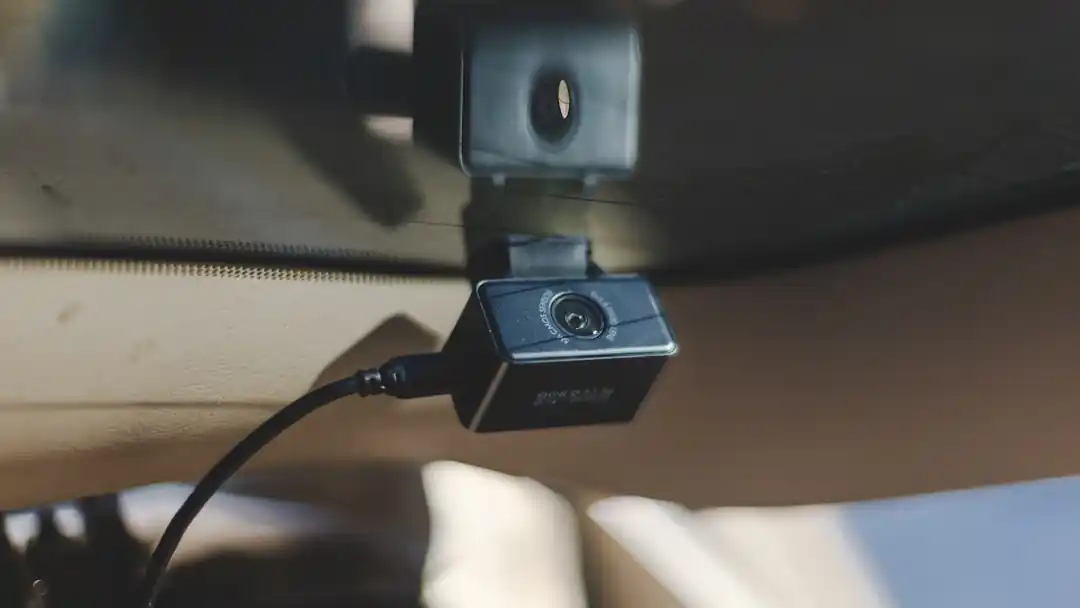

Alongside LiDAR, you have radar and cameras. Radar uses radio waves to detect objects and is particularly good at measuring their speed directly, thanks to the Doppler effect: a radio wave that bounces off a moving object comes back at a slightly shifted frequency, and the size of that shift reveals how fast the object is approaching or receding. That makes radar essential for features like adaptive cruise control. A huge advantage of radar is that it works well in bad weather like rain, snow, or fog, conditions where other sensors might struggle. Cameras, the most human-like of the sensors, provide the rich visual context that LiDAR and radar can't. They are essential for reading road signs, identifying traffic light colors, and understanding the subtle visual cues of a complex urban environment. Finally, ultrasonic sensors, typically found in the bumpers, use sound waves to detect objects at very close range, making them perfect for parking maneuvers.
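Here is a rough sketch of how a Doppler shift becomes a speed. It assumes a 77 GHz carrier, a common automotive radar band, and uses the textbook relation v = f_d * wavelength / 2 (the factor of 2 appears because the wave is shifted on the way out and again on the way back). Production radars usually extract this shift from phase changes across many chirps rather than reading it off directly, so treat this as the underlying physics rather than a real signal-processing chain; the function name and the 5,130 Hz example are hypothetical.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0

def radial_speed_m_s(doppler_shift_hz: float, carrier_hz: float = 77e9) -> float:
    """Relative (radial) speed from the Doppler shift of a reflected radar wave.

    The echo is shifted twice (out and back), hence the division by 2.
    A positive result means the object is closing in on the radar.
    """
    wavelength_m = SPEED_OF_LIGHT_M_S / carrier_hz
    return doppler_shift_hz * wavelength_m / 2.0

v = radial_speed_m_s(5_130.0)
print(f"closing speed: {v:.2f} m/s ({v * 3.6:.1f} km/h)")
```

At 77 GHz the wavelength is only about 4 mm, so even a modest closing speed of around 10 m/s produces a shift of several kilohertz that is easy to measure.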

The Digital Brain: Perception, Planning, and Action

All of that rich data from the sensors would be useless without a powerful "brain" to make sense of it all. This is where the magic of artificial intelligence and machine learning comes into play. The car's central computer runs incredibly complex software that takes the torrent of information from the sensor suite and turns it into a coherent understanding of the world. This process is often broken down into a few key steps: perception, planning, and action.

The perception stage is all about answering the question, "What's around me?" The AI uses deep learning models, specifically neural networks that have been trained on millions of miles of driving data, to identify and classify objects. It learns to distinguish between a pedestrian and a lamppost, a bicycle and a motorcycle, a stop sign and a red balloon. It doesn't just see objects; it tracks their velocity and predicts their likely path. Is that pedestrian about to step into the street? Is that car signaling to merge into my lane? The system is constantly making these predictions.
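The simplest way to answer "Is that pedestrian about to step into the street?" is a constant-velocity prediction: take the tracked position and velocity and extrapolate them a few seconds ahead. Real systems use learned motion models that handle turns, hesitation, and intent, so this is only a baseline sketch. The `Track` class, the 0.5 s step, the 3 s horizon, and the 1.8 m lane half-width are all hypothetical values chosen for illustration.

```python
from dataclasses import dataclass

@dataclass
class Track:
    x_m: float      # position along the road, meters ahead of the car
    y_m: float      # lateral position, meters left of the car's centerline
    vx_m_s: float   # estimated velocity along the road
    vy_m_s: float   # estimated lateral velocity (negative = moving right)

def predict(track: Track, horizon_s: float, step_s: float = 0.5) -> list[tuple[float, float]]:
    """Constant-velocity prediction: extrapolate the current velocity forward in time."""
    steps = int(round(horizon_s / step_s))
    return [
        (track.x_m + track.vx_m_s * k * step_s, track.y_m + track.vy_m_s * k * step_s)
        for k in range(1, steps + 1)
    ]

def enters_lane(path: list[tuple[float, float]], half_width_m: float = 1.8) -> bool:
    """True if any predicted point falls inside our lane (|y| <= half the lane width)."""
    return any(abs(y) <= half_width_m for _, y in path)

# A pedestrian on the curb 20 m ahead and 4 m to the left, walking toward the road at 1.4 m/s
pedestrian = Track(x_m=20.0, y_m=4.0, vx_m_s=0.0, vy_m_s=-1.4)
path = predict(pedestrian, horizon_s=3.0)
print(enters_lane(path))
```

Even this crude model flags that the pedestrian will be in the car's lane within about two seconds, which is exactly the kind of early warning the planning stage needs.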

Once the car has a solid understanding of its environment, it moves to the planning stage. Here, the AI plots the safest and most efficient path forward. It considers everything from the speed limit and traffic laws to the comfort of the passengers. It's a constant process of re-evaluation. If a car ahead suddenly brakes, the system immediately recalculates a new plan. Finally, the action stage is where the digital commands become physical reality. The computer sends precise electrical signals to the car's actuators, which control the steering, acceleration, and braking. This entire sense-plan-act loop happens many times per second, allowing the car to react to a changing world with incredible speed and precision.
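A toy version of the sense-plan-act loop shows how these stages fit together. In this sketch, assumed purely for illustration, "perception" is simulated, the planner picks a target speed that respects both the speed limit and a 2-second following gap, and the action stage turns the speed error into an acceleration command clamped to comfortable and physically plausible limits. Every name and number here (`WorldState`, the 10 Hz loop, the gains and limits) is hypothetical; real planners optimize full trajectories, not just speed.

```python
from dataclasses import dataclass

@dataclass
class WorldState:
    """What a (hypothetical) perception stage hands to the planner each cycle."""
    ego_speed_m_s: float
    gap_to_lead_m: float      # distance to the car ahead
    speed_limit_m_s: float

def plan_target_speed(state: WorldState, time_gap_s: float = 2.0) -> float:
    """Plan: drive at the speed limit unless that would shrink the gap below time_gap_s seconds."""
    speed_allowed_by_gap = state.gap_to_lead_m / time_gap_s
    return max(0.0, min(state.speed_limit_m_s, speed_allowed_by_gap))

def act(state: WorldState, target_m_s: float, gain_per_s: float = 1.0,
        max_accel_m_s2: float = 2.0, max_brake_m_s2: float = 6.0) -> float:
    """Act: turn the speed error into an acceleration command, clamped for comfort and safety."""
    accel = gain_per_s * (target_m_s - state.ego_speed_m_s)
    return max(-max_brake_m_s2, min(max_accel_m_s2, accel))

def simulate(steps: int = 50, dt_s: float = 0.1) -> list[tuple[float, float]]:
    """Run the loop at 10 Hz; after one second the lead car's speed drops abruptly to 10 m/s."""
    ego_v, lead_v, gap = 25.0, 25.0, 50.0
    history = []
    for k in range(steps):
        if k == 10:
            lead_v = 10.0                                      # lead car suddenly slows down
        state = WorldState(ego_v, gap, speed_limit_m_s=27.0)   # sense (simulated here)
        target = plan_target_speed(state)                      # plan
        accel = act(state, target)                             # act
        ego_v = max(0.0, ego_v + accel * dt_s)                 # vehicle responds
        gap += (lead_v - ego_v) * dt_s                         # gap shrinks if we are faster
        history.append((ego_v, gap))
    return history

history = simulate()
final_speed, final_gap = history[-1]
print(f"after 5 s: speed {final_speed:.1f} m/s, gap {final_gap:.1f} m")
```

Because the plan is recomputed on every cycle, the car never needs a special "lead car braked" rule: the shrinking gap lowers the target speed on the next tick, and the action stage brakes accordingly.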

The Road Ahead: Levels of Autonomy and Future Hurdles

The journey to a fully driverless world isn't an all-or-nothing proposition. The Society of Automotive Engineers (SAE) has defined six levels of automation, from Level 0 (a completely manual car) to Level 5 (a car that can drive itself anywhere, anytime, under any conditions). Many new cars today come with Level 1 or Level 2 features, like adaptive cruise control or lane-keeping assist. These are driver assistance systems, meaning the human is still fully in charge. The real leap happens at Level 3 and above, where the car takes over the driving task under specific conditions.
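The SAE scale is easy to capture as a small data structure. The sketch below encodes the six levels from the SAE J3016 standard as a Python `IntEnum`, plus a helper that captures the key dividing line described above: at Levels 0 to 2 the human is always driving. The enum and function names are hypothetical, not part of any official API.

```python
from enum import IntEnum

class SAELevel(IntEnum):
    """The six SAE J3016 levels of driving automation."""
    NO_AUTOMATION = 0           # the human does everything
    DRIVER_ASSISTANCE = 1       # steering OR speed support, e.g. adaptive cruise control
    PARTIAL_AUTOMATION = 2      # steering AND speed support, human still supervises
    CONDITIONAL_AUTOMATION = 3  # system drives in limited conditions; human takes over on request
    HIGH_AUTOMATION = 4         # no human fallback needed, but only within a defined operating domain
    FULL_AUTOMATION = 5         # drives anywhere, under all conditions

def human_must_supervise(level: SAELevel) -> bool:
    """At Levels 0-2 the features only assist; the human is driving at all times."""
    return level <= SAELevel.PARTIAL_AUTOMATION

for level in SAELevel:
    who = "human drives" if human_must_supervise(level) else "system drives (when engaged)"
    print(f"Level {level.value} {level.name}: {who}")
```

Using an `IntEnum` keeps the levels ordered, so comparisons like "Level 3 and above" read naturally in code.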

We are currently in a fascinating and messy transition period, with some companies deploying Level 3 and even Level 4 (fully driverless operation, but only within a defined area and set of conditions) systems. However, the road to a true Level 5 future is still paved with significant challenges. Public trust and safety are, rightly, the biggest hurdles. Every accident involving an autonomous vehicle is heavily scrutinized and can set back public perception. Engineers are working tirelessly to build systems that are not just as good as a human driver, but demonstrably safer.

Then there are the regulatory and legal gray areas. Who is at fault in an accident involving a self-driving car? The owner? The manufacturer? The software developer? Our laws were written for human drivers, and the legal system is still catching up. And let's not forget the "edge cases": the nearly infinite number of weird, unpredictable situations that can happen on the road, such as a flock of birds suddenly taking off, a couch falling off a truck, or a construction zone with a human flagger giving confusing signals. Training an AI to handle every conceivable scenario is an immense task. But with every mile driven and every piece of data collected, the system gets a little bit smarter, bringing that science-fiction future one step closer to our daily reality.
