Your First Steps in the Cloud: Getting Started with AWS SageMaker for AI

Dipping your toes into the world of cloud-based AI can feel intimidating. But what if I told you there's a toolkit designed to make it feel less like a mountain and more like a guided trail? Let's talk about AWS SageMaker.

Let's be honest. The term "Artificial Intelligence" gets thrown around so much that it can feel both exciting and incredibly overwhelming. You have the ambition, you've seen the cool projects, and you're ready to build something of your own. But then you hit the first wall: the setup. The servers, the frameworks, the dependencies, the scaling: it’s a lot. Honestly, it’s enough to make anyone want to just close the laptop and go for a walk. I've been there.

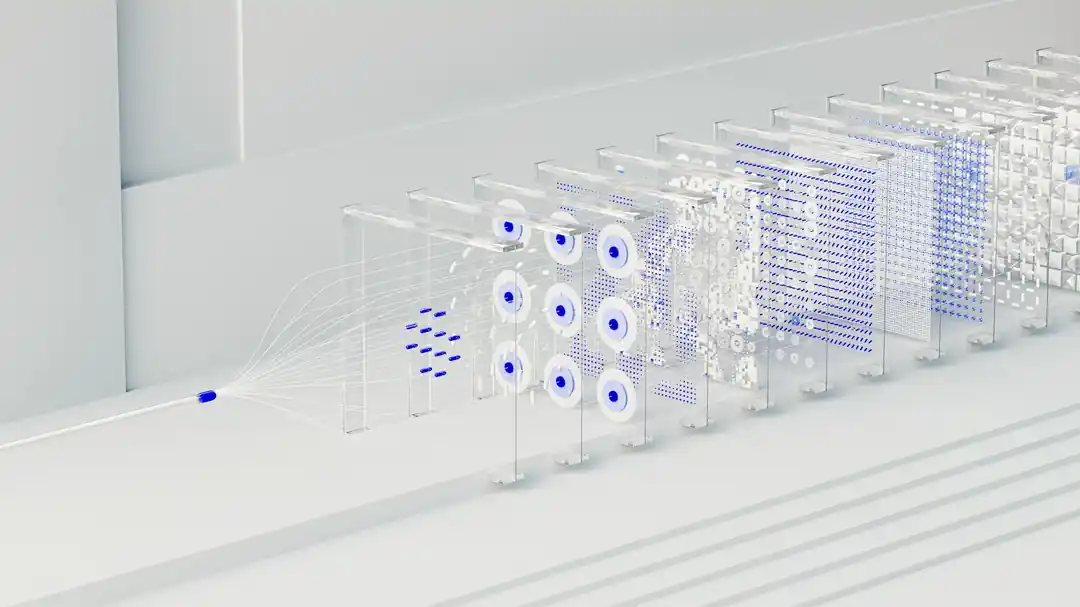

This is the exact moment where a tool like Amazon SageMaker comes into the picture. I used to think of it as this monolithic, experts-only service, but the more I've used it, the more I see it as a powerful ally for developers at any level. It’s a fully managed service from Amazon Web Services (AWS) that’s designed to take the pain out of the machine learning (ML) process. Instead of you wrestling with infrastructure, SageMaker handles the heavy lifting, letting you focus on the part you actually care about: building and training your models.

Think of it less as a single tool and more as a comprehensive workbench. It provides a unified space for everything from data labeling and preparation to model training, tuning, and deployment. For a beginner, this is a godsend. It means you can experience the entire end-to-end ML workflow without needing a PhD in DevOps. It’s about lowering the barrier to entry, and frankly, making AI development fun again.

So, What Is SageMaker, Really?

Before we jump into the "how-to," let's break down what SageMaker actually is. At its heart, it’s a collection of tools and services that you can pick and choose from, all designed to streamline the ML lifecycle. You aren't forced into one rigid workflow; you can use the parts that make sense for your project.

The most common starting point for many is SageMaker Studio. This is a web-based integrated development environment (IDE) for machine learning. Imagine having one place where you can write your code in notebooks, compare experiment results, debug your training jobs, and monitor your deployed models. It brings a sense of order to the often-chaotic process of ML development. I read on the AWS site that it's designed to be the "first fully integrated development environment for machine learning," and honestly, it feels that way. It’s your command center.

Within Studio, or as a standalone service, you'll use SageMaker Notebooks. If you've ever used a Jupyter notebook, you'll be instantly familiar with this. These are managed notebook instances, pre-configured with all the popular data science libraries and ML frameworks (like TensorFlow, PyTorch, and scikit-learn). The magic is that AWS manages the underlying server. Need more power for a heavy computation? You can change the instance type with a few clicks. It’s that simple.

Once you've explored your data and written your model code, you'll use SageMaker Training Jobs. This is where the platform truly shines. You point SageMaker to your dataset (usually stored in an Amazon S3 bucket), select your script and the type of compute resources you need, and SageMaker spins up an isolated environment to train your model. It handles everything—provisioning the servers, running the code, and then tearing it all down when it's done, so you only pay for what you use. This is a massive step up from running a training script on your local machine for hours (or days).
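Under the hood, a training job boils down to one API request that names the container, the data, the compute, and a time limit. The sketch below builds the request body that boto3's `create_training_job` accepts. The job name, image URI, role ARN, S3 paths, and the `ml.m5.xlarge` default are placeholders, and `training_job_request` and `submit` are hypothetical helper names, not part of any SDK.

```python
from typing import Any, Dict


def training_job_request(
    job_name: str,
    image_uri: str,
    role_arn: str,
    train_s3: str,
    output_s3: str,
    instance_type: str = "ml.m5.xlarge",  # placeholder; pick what your job needs
    max_runtime_s: int = 3600,
) -> Dict[str, Any]:
    """Build the keyword arguments for the CreateTrainingJob API call."""
    return {
        "TrainingJobName": job_name,
        # The container image that holds your training code or a built-in algorithm.
        "AlgorithmSpecification": {"TrainingImage": image_uri, "TrainingInputMode": "File"},
        # IAM role SageMaker assumes to read from and write to S3 on your behalf.
        "RoleArn": role_arn,
        # The "train" channel points at an S3 prefix; SageMaker copies it into the container.
        "InputDataConfig": [
            {
                "ChannelName": "train",
                "DataSource": {
                    "S3DataSource": {
                        "S3DataType": "S3Prefix",
                        "S3Uri": train_s3,
                        "S3DataDistributionType": "FullyReplicated",
                    }
                },
            }
        ],
        # Where the finished model artifact (model.tar.gz) is written.
        "OutputDataConfig": {"S3OutputPath": output_s3},
        # The compute SageMaker provisions for this job only.
        "ResourceConfig": {"InstanceType": instance_type, "InstanceCount": 1, "VolumeSizeInGB": 30},
        # Hard stop so a runaway job cannot keep running indefinitely.
        "StoppingCondition": {"MaxRuntimeInSeconds": max_runtime_s},
    }


def submit(request: Dict[str, Any]) -> Dict[str, Any]:
    """Send the request to SageMaker (needs AWS credentials; not called in this sketch)."""
    import boto3  # imported here so building the request works without AWS access

    return boto3.client("sagemaker").create_training_job(**request)
```

Once the job finishes (or hits `MaxRuntimeInSeconds`), SageMaker releases the instance, which is why you only pay for the time the job actually runs.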

Your First Steps: Setting Up the Environment

Alright, ready to get your hands dirty? The first thing you need is an AWS account. If you don't have one, you can sign up on the AWS website. They have a Free Tier that includes a certain amount of SageMaker usage for the first two months, which is perfect for learning.

Once you're in the AWS Management Console, just type "SageMaker" into the search bar. This will take you to the SageMaker dashboard. The most straightforward way to get started is by launching SageMaker Studio. You'll be prompted to set up a "Domain," which is essentially your collaborative ML environment. You'll create a user profile and then, with a single click, you can launch the Studio environment.

The first time Studio loads, it might take a few minutes to configure everything, so it's a good time to grab a coffee. Once it's up, you'll be greeted by a familiar JupyterLab-like interface. From here, you can launch a new notebook, a terminal, or an image builder. The easiest way to start is by creating a new notebook. You'll be asked to select a "kernel," which is the engine that runs your code. Choose one of the Python-based options, and you're ready to write your first lines of code in the cloud.
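A handy first cell is a quick sanity check of what the kernel you picked actually provides. This sketch uses only the standard library. The list of packages is an assumption you can edit, and what is really installed depends on the kernel image you chose.

```python
import importlib.util
import platform
from typing import Dict, Iterable

# Packages a typical data-science kernel is expected to ship with (edit to taste).
EXPECTED = ("numpy", "pandas", "sklearn", "boto3", "sagemaker")


def environment_report(packages: Iterable[str] = EXPECTED) -> Dict[str, bool]:
    """Map each top-level package name to whether it can be imported in this kernel."""
    return {name: importlib.util.find_spec(name) is not None for name in packages}


if __name__ == "__main__":
    print("Python", platform.python_version())
    for name, available in environment_report().items():
        print(f"{name:<10} {'available' if available else 'missing'}")
```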

A crucial piece of advice I wish I had at the start: pay attention to the instance types. Your notebook runs on a specific virtual server (an "instance"). For just writing code and light data exploration, a smaller instance like ml.t3.medium is perfectly fine and cost-effective. Don't start with a massive, expensive GPU instance unless you absolutely need it. You can always change it later.
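If you script your setup, a tiny guardrail can enforce this advice. The sketch below is a hypothetical helper, not a SageMaker feature, and it relies on a naming heuristic: the common GPU families (such as `ml.p3`, `ml.g4dn`, `ml.g5`) start with `ml.p` or `ml.g`.

```python
# Heuristic: SageMaker GPU instance families are named ml.p* and ml.g*.
GPU_PREFIXES = ("ml.p", "ml.g")


def is_gpu_instance(instance_type: str) -> bool:
    """True if the instance type belongs to a P or G (GPU) family."""
    return instance_type.startswith(GPU_PREFIXES)


def pick_instance(requested: str = "ml.t3.medium", allow_gpu: bool = False) -> str:
    """Return the requested type, refusing GPU instances unless explicitly allowed."""
    if is_gpu_instance(requested) and not allow_gpu:
        raise ValueError(f"{requested} is a GPU instance; pass allow_gpu=True if you really need it")
    return requested
```

Defaulting to `ml.t3.medium` mirrors the recommendation above; opting into a GPU becomes a deliberate choice rather than an accident.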

The Core Workflow: From Notebook to Deployed Model

Let's walk through a simplified, high-level workflow. This is the journey your project will likely take within SageMaker.

First, Data Preparation. Your data needs to live somewhere SageMaker can access it, and that place is almost always Amazon S3 (Simple Storage Service). You'll create an "S3 bucket" (think of it as a folder in the cloud) and upload your dataset there. In your SageMaker notebook, you'll write code to pull this data, clean it, and prepare it for training. This is where you'll spend a good chunk of your time, using libraries like Pandas and NumPy to get your data into shape.
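Here is a minimal sketch of that step, assuming a small CSV dataset. The column names and rows are made up, the cleaning rules (drop missing values and duplicates, normalize column names) are just one reasonable choice, and `upload_to_s3` is a hypothetical wrapper around boto3's `upload_file`. The bucket name in the final comment is a placeholder.

```python
import io

import pandas as pd


def clean(df: pd.DataFrame) -> pd.DataFrame:
    """Drop incomplete and duplicate rows, then normalize column names."""
    out = df.dropna().drop_duplicates().copy()
    out.columns = [c.strip().lower().replace(" ", "_") for c in out.columns]
    return out.reset_index(drop=True)


def upload_to_s3(local_path: str, bucket: str, key: str) -> str:
    """Copy a local file to S3 and return its s3:// URI (needs AWS credentials)."""
    import boto3  # imported lazily so the cleaning step runs anywhere

    boto3.client("s3").upload_file(local_path, bucket, key)
    return f"s3://{bucket}/{key}"


# Made-up sample data standing in for your real dataset.
raw = pd.read_csv(io.StringIO("Feature A,Label\n1.0,0\n,1\n1.0,0\n2.5,1\n"))
train = clean(raw)
train.to_csv("train.csv", index=False)

# In SageMaker you would then run something like:
# uri = upload_to_s3("train.csv", "your-bucket-name", "my-project/train/train.csv")
```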

Second, Training. Once your data is ready and your model script is written, you'll configure a SageMaker Training Job. You can do this directly from the notebook using the SageMaker Python SDK. You'll specify the location of your training data in S3, the instance type you want to use for training (this is where you might want a more powerful instance), and the location where you want to save the trained model. When you execute the job, SageMaker takes over, and you can even close your notebook. The training happens independently, and you can monitor its progress from the Studio UI.
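With the SageMaker Python SDK, a job like this is usually configured through an `Estimator`. This is a minimal sketch assuming the generic `sagemaker.estimator.Estimator` and a training container image you already have. The image URI, role ARN, bucket paths, and `ml.m5.xlarge` instance type are placeholders, and `training_config` and `start_training` are hypothetical helper names.

```python
from typing import Any, Dict


def training_config(
    image_uri: str,
    role_arn: str,
    output_s3: str,
    instance_type: str = "ml.m5.xlarge",
) -> Dict[str, Any]:
    """Keyword arguments for sagemaker.estimator.Estimator (all values are placeholders)."""
    return {
        "image_uri": image_uri,          # training container
        "role": role_arn,                # IAM role SageMaker assumes
        "instance_count": 1,
        "instance_type": instance_type,  # training can use a bigger machine than the notebook
        "output_path": output_s3,        # where model.tar.gz lands
    }


def start_training(config: Dict[str, Any], train_s3: str):
    """Launch the job without blocking (needs the sagemaker package and AWS access)."""
    from sagemaker.estimator import Estimator

    estimator = Estimator(**config)
    # wait=False returns right away; the job keeps running on SageMaker after you close the notebook.
    estimator.fit({"train": train_s3}, wait=False)
    return estimator
```

Inside Studio, `sagemaker.get_execution_role()` typically returns the role ARN to pass in, so you rarely need to type it by hand.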

Third, Deployment. This is the magic moment. After a training job completes successfully, you have a trained model artifact saved in S3. With another simple command in your notebook, you can deploy this model to a SageMaker Endpoint. This creates a secure HTTPS endpoint backed by one or more instances that you choose. Your application can then send new data to this endpoint and get back real-time predictions from your model. SageMaker handles the hosting and the underlying servers for you, and it can add or remove instances automatically if you attach an auto scaling policy.
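The sketch below shows both sides of that step: deploying from a trained estimator, and calling the endpoint the way an application would through the SageMaker Runtime `invoke_endpoint` API. It assumes the model's container accepts `text/csv` input (yours may expect JSON instead). The `ml.m5.large` instance type is a placeholder, and `to_csv_payload`, `deploy`, and `predict` are hypothetical helper names.

```python
from typing import Iterable, Sequence


def to_csv_payload(rows: Iterable[Sequence[float]]) -> str:
    """Serialize feature rows as CSV text, one row per line."""
    return "\n".join(",".join(str(v) for v in row) for row in rows)


def deploy(estimator, instance_type: str = "ml.m5.large"):
    """Host the trained model behind a real-time endpoint (billed while it is running)."""
    return estimator.deploy(initial_instance_count=1, instance_type=instance_type)


def predict(endpoint_name: str, rows: Iterable[Sequence[float]]) -> str:
    """Send rows to the endpoint and return the raw response text."""
    import boto3  # needs AWS credentials allowed to invoke the endpoint

    response = boto3.client("sagemaker-runtime").invoke_endpoint(
        EndpointName=endpoint_name,
        ContentType="text/csv",
        Body=to_csv_payload(rows).encode("utf-8"),
    )
    return response["Body"].read().decode("utf-8")


# Typical use from a notebook, after training:
# predictor = deploy(estimator)
# print(predict(predictor.endpoint_name, [[1.0, 2.5]]))
# predictor.delete_endpoint()  # stop paying for the endpoint when you're done
```

Unlike a training job, a real-time endpoint keeps its instances running until you delete it, so the last line of that usage example matters as much as the first.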

This three-step process—prepare, train, deploy—is the fundamental rhythm of working in SageMaker. Mastering this flow is the key to unlocking its power.

Don't Go It Alone: Use the Built-in Examples

One of the best, and sometimes overlooked, features of SageMaker is its rich library of sample notebooks. When you first open SageMaker Studio, you'll see an option to browse examples. These are fully functional, end-to-end notebooks that cover a huge range of use cases, from simple linear regression to advanced computer vision and natural language processing.

I cannot recommend this enough: start with an example. Find one that sounds interesting to you, open it, and run it cell by cell. Read the comments, see how the SageMaker SDK is used, and watch the workflow unfold. This is, without a doubt, the fastest way to understand how the different parts of SageMaker connect. You can see how data is pulled from S3, how a training job is configured, and how a model is deployed.

Once you've run through an example, you can start modifying it. Swap out the sample dataset with your own. Tweak the model's hyperparameters. Try deploying it to a different type of instance. This hands-on approach is far more effective than just reading documentation. It builds muscle memory and gives you the confidence to start your own project from a blank notebook.

The journey into AI is a marathon, not a sprint. Tools like AWS SageMaker are designed to be your running partners, taking care of the tedious logistics so you can focus on the path ahead. So open up that console, launch a notebook, and start building. The cloud is waiting for you.

You might also like

Cracking the Code: How to Build a Film with the Three-Act Structure

Ever wonder what makes your favorite movies just *work*? It’s not magic, but a timeless storytelling blueprint you can learn, too.

The Art of Packing Light: Your Guide to a Southern Summer Vacation

Heading to the southern U.S. this summer? Let's talk about how to pack smart for the heat, humidity, and ever-present air conditioning. It's easier than you think!

Beyond the Postcards: Unearthing Arizona's True Hidden Gems

Arizona is so much more than its famous canyons. Join me on a journey to discover the quiet, breathtaking corners of the Grand Canyon State that often get overlooked.

Don't Get Burned: Essential Desert Hiking Safety Tips for Phoenix Trails

The Sonoran Desert is stunning, but it demands respect. Before you hit the trails near Phoenix, here are the life-saving tips you absolutely need to know.

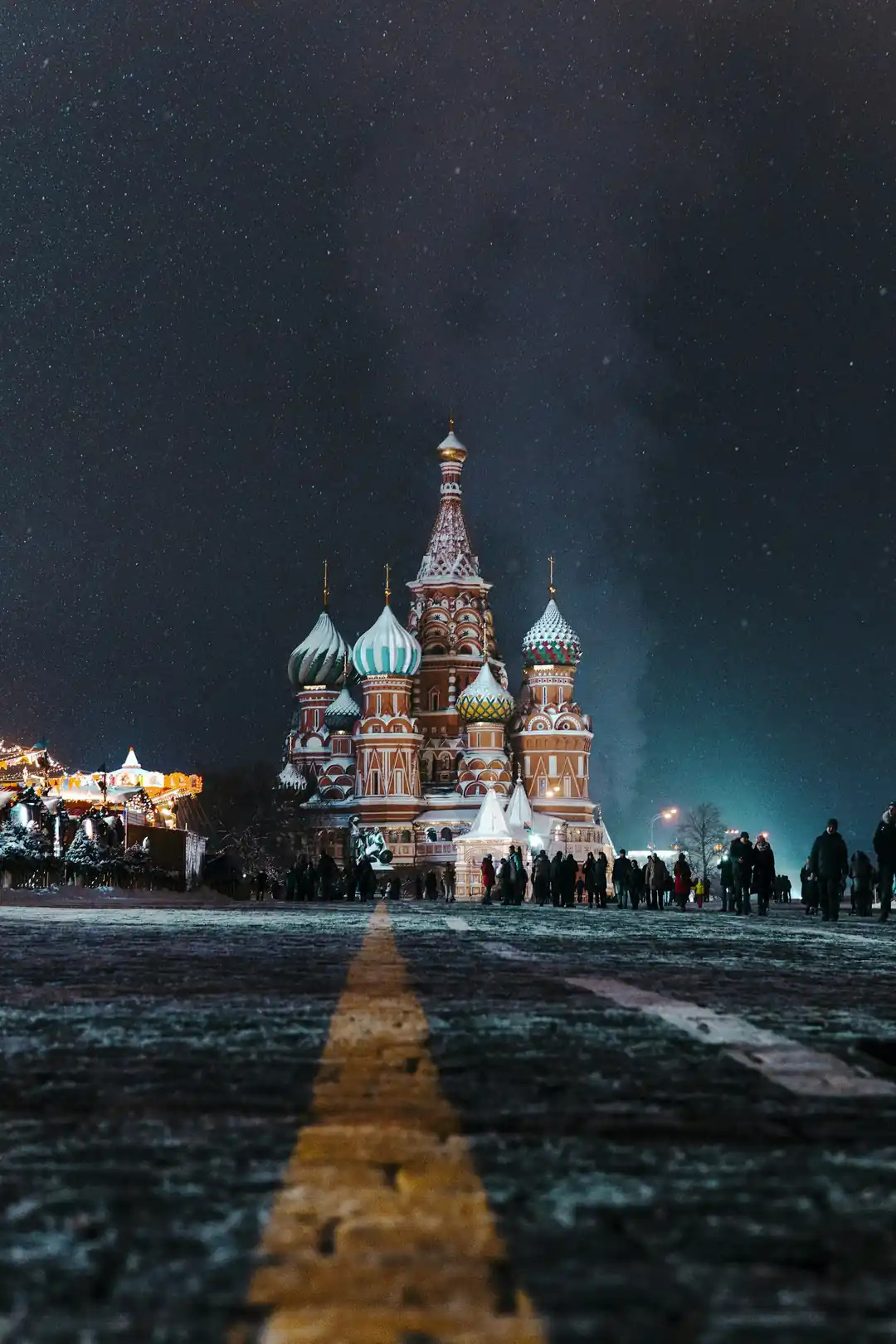

Moscow's Timeless Echoes: A Journey Through History's Grandest Stages

Ever wondered what it feels like to walk through centuries of history? Join me as we explore Moscow's most iconic historical sites, where every cobblestone tells a story.