The Ghost in the Machine: How AI Actually Learns to Write

Ever wonder how AI can write an email, a poem, or even a news article? It's not magic. Let's pull back the curtain on how Large Language Models really work.

It’s a strange feeling, isn't it? You’re reading an article, a marketing email, or maybe just a surprisingly witty social media comment, and a thought pops into your head: Did a human actually write this? Honestly, it’s a question I find myself asking more and more. This sudden explosion of human-like text isn’t a sci-fi movie plot coming to life; it’s the work of Large Language Models, or LLMs, the powerful engines driving the content generation revolution.

For a lot of us, the speed at which this technology has developed feels like it came out of nowhere. One minute, AI was a clunky chatbot, and the next, it’s drafting legal documents and writing poetry. It’s easy to feel a bit of whiplash and maybe even a little intimidated. But the "magic" behind it all is actually a fascinating story of data, architecture, and some incredibly clever math.

Understanding how these models work doesn't just satisfy a curiosity. It helps you use them better, appreciate their strengths, and recognize their very real limitations. So, let's go on a little journey together and demystify the ghost in the machine.

What's Under the Hood? A Super-Powered Autocomplete

At its core, you can think of a Large Language Model as the most sophisticated autocomplete you’ve ever seen. It’s a predictive engine, but instead of just suggesting the next word in your text message, it predicts the next word in a sequence. It does this using the patterns it absorbed from the billions of sentences it was trained on, and it takes the whole surrounding context into account. It’s not "thinking" in the human sense; it's making a highly educated guess.
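To make the autocomplete comparison concrete, here is a deliberately tiny sketch in Python. It assumes the simplest possible "model": a table counting which word follows which in a small made-up corpus, built with hypothetical helpers named `train_bigrams` and `suggest_next`. A real LLM uses a neural network that weighs the whole context rather than just the previous word, but the core job of predicting what comes next from patterns in past text is the same.

```python
from collections import Counter, defaultdict


def train_bigrams(text):
    """Count, for every word, which words follow it and how often."""
    words = text.lower().split()
    follows = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def suggest_next(follows, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    candidates = follows.get(word.lower())
    if not candidates:
        return None
    return candidates.most_common(1)[0][0]


# A tiny made-up corpus standing in for the training data.
corpus = "the cat sat on the mat . the cat ate the fish . the dog sat on the rug ."
model = train_bigrams(corpus)
print(suggest_next(model, "the"))
print(suggest_next(model, "sat"))
```

Asked for the word after "the", this toy suggests "cat", simply because that pairing showed up most often in its data. It has no idea what a cat is.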

The foundational technology that made today's powerful LLMs possible is an innovation called the Transformer architecture. Before the Transformer, which was introduced by Google researchers in 2017, most language models read text one word at a time and struggled to keep track of context in long pieces of text. They would "forget" what was said at the beginning of a paragraph by the time they got to the end.

The Transformer changed everything with a mechanism called "self-attention." This allows the model to weigh the importance of different words in the input text and decide which ones are most relevant to generating the next word. In the models that generate text, each word can look back across everything written so far, all the way to the start of your prompt, rather than only at its immediate neighbors. That richer view of the context is why the output feels so much more coherent and, well, human.
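Here is a minimal sketch of the self-attention calculation in plain Python, assuming toy two-number vectors for three tokens. In a real Transformer, each token's query, key, and value vectors come from learned projections, and there are many attention heads and layers; this sketch simply reuses the same vectors for all three roles, and the function names are hypothetical. It also applies the "causal" mask used by text-generating models: each position scores only itself and earlier positions, because later tokens have not been written yet.

```python
import math


def softmax(scores):
    """Turn raw scores into weights that are positive and sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def causal_self_attention(queries, keys, values):
    """Scaled dot-product attention where position i attends only to positions 0..i."""
    d = len(queries[0])
    outputs, all_weights = [], []
    for i, q in enumerate(queries):
        # Score the current token and every earlier token (the causal mask).
        scores = [dot(q, keys[j]) / math.sqrt(d) for j in range(i + 1)]
        weights = softmax(scores)
        # The output is the attention-weighted average of the visible value vectors.
        out = [
            sum(w * values[j][k] for j, w in enumerate(weights))
            for k in range(len(values[0]))
        ]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights


# Three toy token vectors (hypothetical values chosen for illustration).
vectors = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
out, weights = causal_self_attention(vectors, vectors, vectors)
for row in weights:
    print([round(x, 2) for x in row])
```

Each row of weights sums to 1 and shows how much a token draws on itself and each earlier token. Here the third token, whose vector overlaps with both earlier ones, ends up weighting itself most.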

The Training Regimen: A Digital Brain's Education

So, how does an LLM get so smart? It goes to school, in a sense. A very, very big school. LLMs are trained on absolutely massive datasets drawn from a large swath of the public internet. These include colossal archives of text and code, like the Common Crawl dataset, a regularly updated collection of billions of web pages, as well as huge collections of books and, of course, Wikipedia.

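During this training phase, nobody labels the right answers by hand. The text itself supplies them: the model reads a stretch of text, guesses the next word, compares its guess with the word that actually came next, and adjusts itself slightly so the right answer becomes more likely next time. Repeated over trillions of words, this process teaches it the patterns, relationships, grammar, facts, and even the stylistic nuances of human language. It learns that "queen" is related to "king" and that Python code looks different from a Shakespearean sonnet. All this learned knowledge is stored in the model's "parameters," internal numerical weights that can number in the hundreds of billions for the most advanced models.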
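The idea that the text supplies its own answers can be sketched in a few lines. This assumes whitespace-separated words stand in for tokens and uses two hypothetical helpers: `next_token_pairs` slices text into (context, next word) training examples, and `cross_entropy` scores a single guess using the cross-entropy loss commonly used for this kind of prediction. Actual training runs this over trillions of tokens and uses gradient descent to nudge the parameters toward lower loss, which is not shown here.

```python
import math


def next_token_pairs(tokens, context_size):
    """Slice raw text into (context, target) examples; no human labels needed."""
    pairs = []
    for i in range(1, len(tokens)):
        context = tokens[max(0, i - context_size):i]
        pairs.append((context, tokens[i]))
    return pairs


def cross_entropy(predicted_probs, target):
    """Loss for one example: small when the true next token got high probability."""
    # 1e-9 stands in for "almost zero" if the model never considered the target.
    return -math.log(predicted_probs.get(target, 1e-9))


tokens = "to be or not to be".split()
for context, target in next_token_pairs(tokens, context_size=3):
    print(context, "->", target)

# Two hypothetical model guesses for what follows "to":
confident_and_right = {"be": 0.9, "or": 0.1}
confident_and_wrong = {"be": 0.1, "or": 0.9}
print(round(cross_entropy(confident_and_right, "be"), 3))
print(round(cross_entropy(confident_and_wrong, "be"), 3))
```

Training amounts to adjusting the parameters so that, averaged over all those examples, the model's guesses look more like the first dictionary and less like the second.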

This is also where the inherent biases of AI come from. Because the models are trained on a snapshot of the internet—with all of its brilliance, flaws, and prejudices—they inevitably learn and can replicate those biases in their output. It’s a stark reminder that these tools are a reflection of the data we feed them, not objective arbiters of truth.
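A toy example shows how a skew in the data turns into a skew in the output, with no intent involved. The corpus below is made up and deliberately lopsided, and `next_word_probabilities` is a hypothetical helper that estimates the next word purely by counting. Real models learn far subtler statistics, but the same principle applies: whatever is overrepresented in the training text tends to be overrepresented in the predictions.

```python
from collections import Counter


def next_word_probabilities(sentences, prefix):
    """Estimate P(next word | prefix) purely from how often each continuation appears."""
    prefix_words = prefix.lower().split()
    n = len(prefix_words)
    counts = Counter()
    for sentence in sentences:
        words = sentence.lower().split()
        # Slide over the sentence, stopping where a following word still exists.
        for i in range(len(words) - n):
            if words[i:i + n] == prefix_words:
                counts[words[i + n]] += 1
    total = sum(counts.values())
    return {word: c / total for word, c in counts.items()} if total else {}


# A deliberately skewed, hypothetical corpus.
corpus = [
    "the nurse said she was tired",
    "the nurse said she would help",
    "the nurse said she agreed",
    "the nurse said he was late",
]
print(next_word_probabilities(corpus, "nurse said"))
```

The counting model has no opinion of its own; it just hands the 3-to-1 imbalance in its data straight back as a 75% vs. 25% prediction.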

From Prompt to Prose: The Creative Process

Once the model is trained, how does it actually create content from your prompt? The process is both simple and incredibly complex. When you type in a request, the model first breaks your words down into smaller pieces called "tokens," which can be whole words, fragments of words, or punctuation marks. These tokens are then converted into numerical representations that the model can process.
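The path from text to numbers can be sketched like this. It's a simplification: production LLMs use subword tokenizers (byte-pair encoding is a common choice) that can split a rare word into several pieces, whereas the hypothetical `tokenize` below just splits on words and punctuation. The embedding table is filled with seeded random numbers as a stand-in for the vectors a real model learns during training, and `build_vocab` and `embed` are likewise illustrative names.

```python
import random
import re


def tokenize(text):
    """Split into lowercase words and punctuation marks (a stand-in for subword tokenizers)."""
    return re.findall(r"\w+|[^\w\s]", text.lower())


def build_vocab(tokens):
    """Assign each distinct token an integer ID, in order of first appearance."""
    vocab = {}
    for tok in tokens:
        if tok not in vocab:
            vocab[tok] = len(vocab)
    return vocab


def embed(ids, table):
    """Look up one vector per token ID."""
    return [table[i] for i in ids]


prompt = "The cat saw the dog."
tokens = tokenize(prompt)
vocab = build_vocab(tokens)
ids = [vocab[t] for t in tokens]

rng = random.Random(0)  # fixed seed so the toy vectors are reproducible
table = [[round(rng.uniform(-1, 1), 2) for _ in range(4)] for _ in vocab]
vectors = embed(ids, table)

print(tokens)
print(ids)
```

Both occurrences of "the" get the same ID and therefore the same starting vector; it is the later attention layers that let context change how each occurrence is interpreted.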

From there, the prediction engine kicks in. Based on your input and its vast training, the model calculates a probability for every possible next token. It then picks one, sometimes simply the most likely, though many systems sample from these probabilities to add variety, and adds it to the sequence. Then it does the entire process over again: it looks at the original prompt plus every token it has generated so far, and predicts the next one.
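The predict, append, repeat loop is easy to sketch. The probability table `NEXT` and the function `generate` are hypothetical, and to keep the example small the table only looks at the last token, whereas a real LLM conditions on the whole sequence so far. The `greedy` flag switches between always taking the most likely token and sampling in proportion to the probabilities.

```python
import random

# Hypothetical next-token probabilities. A real LLM computes these from the
# whole sequence so far; this toy table only looks at the last token.
NEXT = {
    "the": {"sea": 0.5, "sky": 0.3, "end": 0.2},
    "a": {"storm": 0.7, "ship": 0.3},
    "sea": {"is": 0.8, "<end>": 0.2},
    "sky": {"is": 0.9, "<end>": 0.1},
    "storm": {"is": 0.6, "<end>": 0.4},
    "ship": {"is": 0.5, "<end>": 0.5},
    "end": {"<end>": 1.0},
    "is": {"calm": 0.5, "wild": 0.4, "<end>": 0.1},
    "calm": {"<end>": 1.0},
    "wild": {"<end>": 1.0},
}


def generate(prompt_tokens, max_new_tokens=10, greedy=True, rng=None):
    """Repeatedly predict a next token and append it until <end> or the length cap."""
    rng = rng or random.Random()
    sequence = list(prompt_tokens)
    for _ in range(max_new_tokens):
        probs = NEXT[sequence[-1]]
        if greedy:
            token = max(probs, key=probs.get)  # always the single most likely token
        else:
            # Sample in proportion to probability, so less likely tokens sometimes win.
            token = rng.choices(list(probs), weights=list(probs.values()))[0]
        if token == "<end>":
            break
        sequence.append(token)  # the new token becomes part of the next step's input
    return sequence


print(" ".join(generate(["the"])))
print(" ".join(generate(["a"])))
print(" ".join(generate(["the"], greedy=False, rng=random.Random(42))))
```

With greedy decoding, the prompt `["the"]` yields "the sea is calm" while `["a"]` yields "a storm is calm": changing the starting point sends the same model down a different path. Sampling with a seeded `random.Random` gives a repeatable run that need not follow the most likely path.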

This word-by-word (or token-by-token) generation is what allows for such a wide variety of outputs. By guiding the model with a well-crafted prompt, you are essentially setting the starting point and direction for this chain of predictions. A slight change in your prompt can send the model down a completely different predictive path, resulting in a different tone, style, or outcome. It’s less like giving an order and more like steering a ship.

A New Tool, Not a New Author

It’s completely normal to feel a mix of awe and apprehension about this technology. The outputs can be so impressive that we sometimes forget what they are: incredibly advanced pattern-matching systems. They don't have beliefs, intentions, or a soul. They don't "know" things; they predict what word should come next based on the data they've seen.

This is why the human element is more important than ever. An LLM can generate a blog post, but a human writer is needed to ensure its accuracy, inject genuine emotion, and connect it to a real, lived experience. An LLM can draft an email, but a human touch is what makes it feel personal and sincere.

Thinking of these models as collaborators rather than replacements is, I think, the healthiest way forward. They are powerful tools that can augment our own creativity and handle the heavy lifting of a first draft, leaving us to do what we do best: refine, contextualize, and infuse the work with a spark of our own humanity. And that’s a future I find genuinely exciting.

You might also like

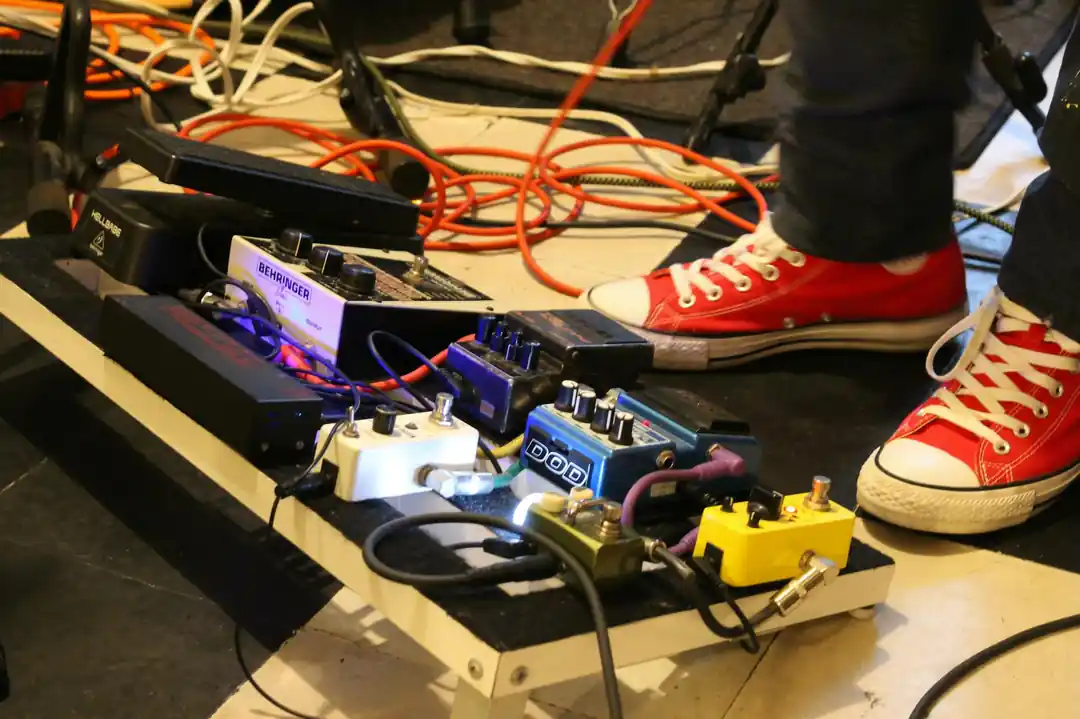

How Does Neural Capture Actually Work? A Deep Dive Into the Quad Cortex's Magic

Ever wondered how the Neural DSP Quad Cortex magically captures the soul of your favorite amps and pedals? Let's dive into the biomimetic AI that makes it all possible.

The Ultimate Guide to Choosing the Best Streaming Service in 2025

Feeling overwhelmed by the endless sea of streaming options? Let's break down how to find the perfect service for your binge-watching needs and budget.

The Unthinkable Cost: How to Fund Your Legal Defense When Your Future Is on the Line

Facing a legal battle is daunting enough without the crushing weight of attorney fees. Let's walk through the real options you have for financing your defense.

Recession-Proof Investing: A Beginner's Guide to Building a Resilient Portfolio

The word 'recession' can be scary, but your investment strategy doesn't have to be. Let's explore some foundational basics for building a portfolio that can weather economic storms.

How to Talk to Your Kids About Climate Change (Without Scaring Them)

It feels like a heavy topic, but talking to our children about climate change is one of the most important things we can do. Here’s how to approach it with honesty, simplicity, and a whole lot of hope.